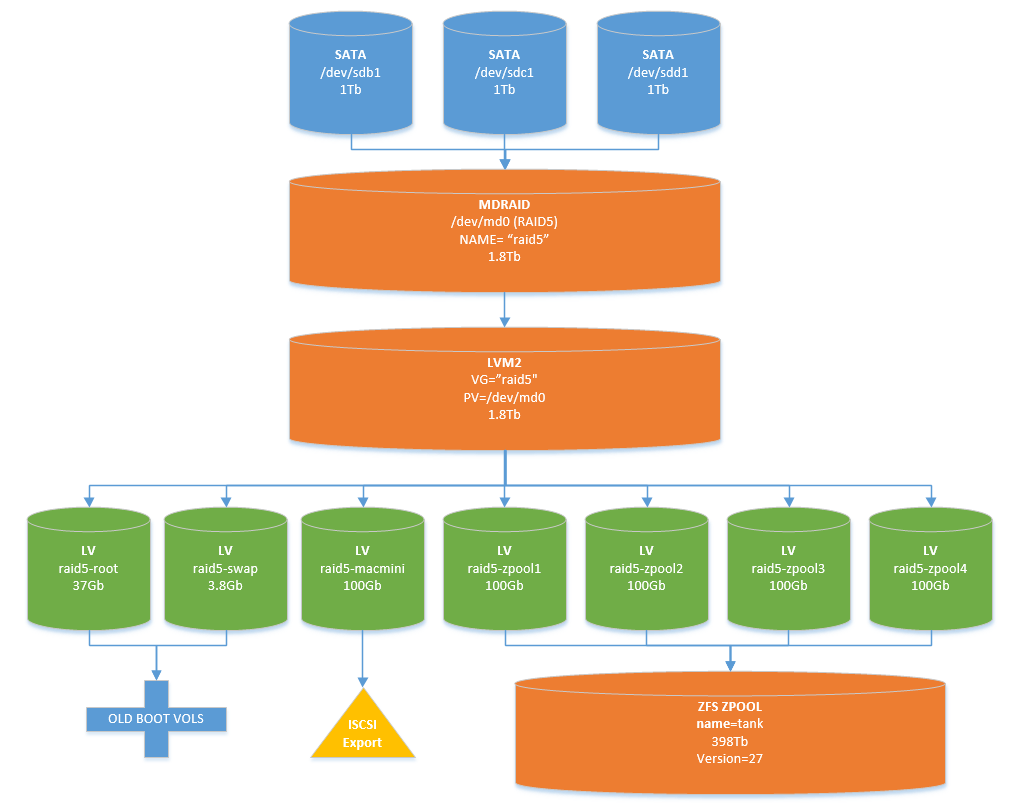

Found a set of disks in my pile that came out of a Buffalo NAS.

```
root@debian:~# mdadm --detail /dev/md127
/dev/md127:
           Version : 1.2
     Creation Time : Thu May 28 11:42:20 2015
        Raid Level : raid5
        Array Size : 1953259520 (1862.77 GiB 2000.14 GB)
     Used Dev Size : 976629760 (931.39 GiB 1000.07 GB)
      Raid Devices : 3
     Total Devices : 3
       Persistence : Superblock is persistent
     Intent Bitmap : Internal
       Update Time : Thu Oct 3 01:21:52 2019
             State : active
    Active Devices : 3
   Working Devices : 3
    Failed Devices : 0
     Spare Devices : 0
            Layout : left-symmetric
        Chunk Size : 512K
Consistency Policy : bitmap
              Name : buffalo:0
              UUID : 817c66c9:ab5aba3d:46cf3be0:c46fd224
            Events : 11591

    Number   Major   Minor   RaidDevice State
       0       8       17        0      active sync   /dev/sdb1
       1       8       49        1      active sync   /dev/sdd1
       2       8       33        2      active sync   /dev/sdc1
```
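The sizes in the mdadm output fit together the way RAID5 should: one device's worth of space goes to parity, so the usable array size is (devices - 1) times the per-device size. A minimal sketch of that arithmetic using the numbers above (mdadm reports both sizes in KiB); the helper names are just for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helper: RAID5 spends one device's worth of space on parity,
# so usable size = (raid_devices - 1) * used_dev_size. Sizes are KiB, as in mdadm --detail.
raid5_usable_kib() {
  local devices=$1 dev_size_kib=$2
  echo $(( (devices - 1) * dev_size_kib ))
}

# Hypothetical helper: KiB -> GiB with two decimals, like the bracketed figures mdadm prints.
kib_to_gib() {
  awk -v kib="$1" 'BEGIN { printf "%.2f\n", kib / 1048576 }'
}

usable=$(raid5_usable_kib 3 976629760)
echo "$usable"          # 1953259520, matching "Array Size"
kib_to_gib "$usable"    # 1862.77
```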

```
root@debian:~# pvdisplay /dev/md127
  --- Physical volume ---
  PV Name               /dev/md127
  VG Name               raid5
  PV Size               <1.82 TiB / not usable 4.00 MiB
  Allocatable           yes
  PE Size               4.00 MiB
  Total PE              476869
  Free PE               338357
  Allocated PE          138512
  PV UUID               2UPreL-vdQI-NdXn-xfhR-BxgN-FADg-UpYjVn
```

```
root@debian:~# vgdisplay raid5
  --- Volume group ---
  VG Name               raid5
  System ID
  Format                lvm2
  Metadata Areas        1
  Metadata Sequence No  9
  VG Access             read/write
  VG Status             resizable
  MAX LV                0
  Cur LV                7
  Open LV               5
  Max PV                0
  Cur PV                1
  Act PV                1
  VG Size               <1.82 TiB
  PE Size               4.00 MiB
  Total PE              476869
  Alloc PE / Size       138512 / 541.06 GiB
  Free PE / Size        338357 / 1.29 TiB
  VG UUID               ADkPZw-R27s-6FUZ-3LW7-JUOV-oPyW-55pWu4
```
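LVM counts space in physical extents (PE), 4 MiB each in this volume group. Allocated plus free extents should equal the total, and extents times PE size gives the sizes vgdisplay prints. A small sketch of that check with the values copied from the output; pe_to_gib is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Values copied from the vgdisplay output for VG "raid5".
total_pe=476869
alloc_pe=138512
free_pe=338357
pe_mib=4   # "PE Size 4.00 MiB"

# Hypothetical helper: extents -> GiB with two decimals.
pe_to_gib() {
  awk -v pe="$1" -v mib="$2" 'BEGIN { printf "%.2f\n", pe * mib / 1024 }'
}

# Every extent is either allocated or free.
[ $(( alloc_pe + free_pe )) -eq "$total_pe" ] && echo "extents add up"
pe_to_gib "$total_pe" "$pe_mib"   # 1862.77, i.e. just under 1.82 TiB ("VG Size")
pe_to_gib "$alloc_pe" "$pe_mib"   # 541.06 ("Alloc PE / Size")
pe_to_gib "$free_pe" "$pe_mib"    # 1321.71, i.e. about 1.29 TiB ("Free PE / Size")
```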

```
root@debian:~# lvdisplay raid5
  --- Logical volume ---
  LV Path                /dev/raid5/root
  LV Name                root
  VG Name                raid5
  LV UUID                CRKUp4-a5lj-QeI2-mdiX-ifxY-3OH5-wVWGOw
  LV Write Access        read/write
  LV Creation host, time buffalo, 2015-05-28 11:50:46 +1000
  LV Status              available
  # open                 1
  LV Size                37.25 GiB
  Current LE             9536
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     4096
  Block device           253:2

  --- Logical volume ---
  LV Path                /dev/raid5/swap
  LV Name                swap
  VG Name                raid5
  LV UUID                iGclU8-Fm3T-GSgD-L8AP-UJDk-Y5H3-iiQmWo
  LV Write Access        read/write
  LV Creation host, time buffalo, 2015-05-28 11:51:00 +1000
  LV Status              available
  # open                 0
  LV Size                3.81 GiB
  Current LE             976
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     4096
  Block device           253:3

  --- Logical volume ---
  LV Path                /dev/raid5/zpool1
  LV Name                zpool1
  VG Name                raid5
  LV UUID                clahFF-RXYF-QsQ3-xBgG-rMa9-y0Ac-8zDdit
  LV Write Access        read/write
  LV Creation host, time buffalo, 2015-05-28 13:36:22 +1000
  LV Status              available
  # open                 1
  LV Size                100.00 GiB
  Current LE             25600
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     4096
  Block device           253:4

  --- Logical volume ---
  LV Path                /dev/raid5/zpool2
  LV Name                zpool2
  VG Name                raid5
  LV UUID                3v95G3-Uev9-V3LI-PyYt-WzXu-kdch-fNMsjP
  LV Write Access        read/write
  LV Creation host, time buffalo, 2015-05-28 13:36:26 +1000
  LV Status              available
  # open                 1
  LV Size                100.00 GiB
  Current LE             25600
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     4096
  Block device           253:5

  --- Logical volume ---
  LV Path                /dev/raid5/zpool3
  LV Name                zpool3
  VG Name                raid5
  LV UUID                y4McZn-hLW3-jQR2-Pzkz-9QEc-Jd5p-TZ9idI
  LV Write Access        read/write
  LV Creation host, time buffalo, 2015-05-28 13:36:29 +1000
  LV Status              available
  # open                 1
  LV Size                100.00 GiB
  Current LE             25600
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     4096
  Block device           253:6

  --- Logical volume ---
  LV Path                /dev/raid5/zpool4
  LV Name                zpool4
  VG Name                raid5
  LV UUID                DOO9th-5B32-9kX6-NkwG-4Dbx-AKcN-83XiAT
  LV Write Access        read/write
  LV Creation host, time buffalo, 2015-05-28 13:36:32 +1000
  LV Status              available
  # open                 1
  LV Size                100.00 GiB
  Current LE             25600
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     4096
  Block device           253:7

  --- Logical volume ---
  LV Path                /dev/raid5/macmini
  LV Name                macmini
  VG Name                raid5
  LV UUID                XL6d9V-SGfH-6m16-WH8E-JobI-oGn9-HuGDjL
  LV Write Access        read/write
  LV Creation host, time buffalo, 2015-06-01 13:46:25 +1000
  LV Status              available
  # open                 0
  LV Size                100.00 GiB
  Current LE             25600
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     4096
  Block device           253:8
```
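The seven LVs account for exactly the allocated extents: 9536 (root) + 976 (swap) + 5 × 25600 (zpool1 to zpool4 and macmini) = 138512, the "Alloc PE" figure from vgdisplay. A sketch that sums the "Current LE" lines with awk; sum_current_le is a hypothetical helper, and on a live system you would pipe `lvdisplay raid5` into it as root. Here it is fed the extent counts from the post:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add up every "Current LE" line of lvdisplay output.
# In "  Current LE             9536" the fields are $1=Current, $2=LE, $3=9536.
sum_current_le() {
  awk '$1 == "Current" && $2 == "LE" { sum += $3 } END { print sum + 0 }'
}

# Live use (as root): lvdisplay raid5 | sum_current_le
# Demo with the extent counts of root, swap, zpool1-4 and macmini from the output above.
printf '  Current LE  %s\n' 9536 976 25600 25600 25600 25600 25600 | sum_current_le
# 138512
```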

Upgrading ZFS/zPool

The disks are now in a Debian 10 machine. The pool was originally created with zfs-fuse 0.7.2, so it was still at ZFS filesystem version 4 and zpool version 23.
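The post doesn't show how the stack was brought up on the Debian box. A minimal sketch, assuming the usual order (assemble the md array, activate the raid5 volume group, then import the pool from the LV device nodes) and that the ZFS on Linux tools are installed. The `run`/`bring_up` wrappers and the `DRY_RUN` variable are hypothetical, and by default the script only prints the commands. If the pool was never exported on the NAS, `zpool import` may refuse and suggest `-f`.

```shell
#!/usr/bin/env bash
# Hypothetical bring-up order: md RAID5 -> LVM VG "raid5" -> ZFS pool "tank" on the zpool1..4 LVs.
# By default this only prints the commands; run with DRY_RUN=0 (as root) to execute them.
set -euo pipefail

run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

bring_up() {
  run mdadm --assemble --scan            # start md127 from the RAID superblocks
  run vgchange -ay raid5                 # activate the LVs, creating /dev/raid5/*
  run zpool import -d /dev/raid5 tank    # look for pool members only under /dev/raid5
}

bring_up
```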

ZFS

```
root@debian:~# zfs list
NAME   USED  AVAIL  REFER  MOUNTPOINT
tank  57.3G   328G  57.3G  /tank
root@debian:~# zfs get version
NAME  PROPERTY  VALUE  SOURCE
tank  version   4      -
root@debian:/# zfs upgrade
This system is currently running ZFS filesystem version 5.

The following filesystems are out of date, and can be upgraded. After being
upgraded, these filesystems (and any 'zfs send' streams generated from
subsequent snapshots) will no longer be accessible by older software versions.

VER  FILESYSTEM
---  ------------
 4   tank
root@debian:/# zfs upgrade tank
1 filesystems upgraded
```

zPool

```
root@debian:~# zpool get version
NAME  PROPERTY  VALUE  SOURCE
tank  version   23     local
root@debian:~# zpool list tank
NAME   SIZE  ALLOC   FREE  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
tank   398G  73.2G   325G         -      -    18%  1.00x  ONLINE  -
```
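The CAP column is ALLOC as a whole-number percentage of SIZE: 73.2G of 398G is about 18.4%, shown as 18%. A one-function awk sketch of that arithmetic (cap_percent is a hypothetical name, and it works on the rounded figures zpool prints):

```shell
#!/usr/bin/env bash
# Hypothetical helper: pool capacity as a whole-number percentage (ALLOC / SIZE).
# awk's %d drops the fractional part.
cap_percent() {
  awk -v alloc="$1" -v size="$2" 'BEGIN { printf "%d%%\n", alloc * 100 / size }'
}

cap_percent 73.2 398   # 18%, the CAP column for tank
```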

```
root@debian:~# zpool status
  pool: tank
 state: ONLINE
status: The pool is formatted using a legacy on-disk format. The pool can
        still be used, but some features are unavailable.
action: Upgrade the pool using 'zpool upgrade'. Once this is done, the
        pool will no longer be accessible on software that does not support
        feature flags.
  scan: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        tank        ONLINE       0     0     0
          zpool1    ONLINE       0     0     0
          zpool2    ONLINE       0     0     0
          zpool3    ONLINE       0     0     0
          zpool4    ONLINE       0     0     0

errors: No known data errors
```

```
root@debian:/# zpool upgrade tank
This system supports ZFS pool feature flags.

Successfully upgraded 'tank' from version 23 to feature flags.
Enabled the following features on 'tank':
  async_destroy
  empty_bpobj
  lz4_compress
  multi_vdev_crash_dump
  spacemap_histogram
  enabled_txg
  hole_birth
  extensible_dataset
  embedded_data
  bookmarks
  filesystem_limits
  large_blocks
  large_dnode
  sha512
  skein
  edonr
  userobj_accounting
```

```
root@debian:/# zpool get version
NAME  PROPERTY  VALUE  SOURCE
tank  version   -      default
```
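After the upgrade the legacy `version` property reads "-": a feature-flag pool tracks each capability as a `feature@<name>` property instead, whose state is disabled, enabled or active. A sketch that pulls those out of the default `zpool get all` output (NAME PROPERTY VALUE SOURCE columns); `features` is a hypothetical helper name and the sample lines are only shaped like that output:

```shell
#!/usr/bin/env bash
# Hypothetical helper: from "zpool get all <pool>" output, print each feature and its state.
# Columns are NAME PROPERTY VALUE SOURCE; non-feature properties are skipped.
features() {
  awk '$2 ~ /^feature@/ { sub(/^feature@/, "", $2); print $2, $3 }'
}

# Live use: zpool get all tank | features
printf '%s\n' \
  'NAME  PROPERTY              VALUE    SOURCE' \
  'tank  version               -        default' \
  'tank  feature@lz4_compress  enabled  local' | features
# lz4_compress enabled
```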

```
root@debian:/# zpool status
  pool: tank
 state: ONLINE
  scan: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        tank        ONLINE       0     0     0
          zpool1    ONLINE       0     0     0
          zpool2    ONLINE       0     0     0
          zpool3    ONLINE       0     0     0
          zpool4    ONLINE       0     0     0

errors: No known data errors
```